Why 95% of AI Commentary Fails

In which I actually read the paper everyone is talking about.

First, A Depressing Example

A sad fact of the modern era is that whenever a scientific paper goes mini-viral, actually reading the paper almost invariably makes you feel like some kind of wild-eyed conspiracy theorist: you know the secret truth, while most of the chattering masses are deluded fools!

This is ~1000% so when the paper relates to a hot-button topic like AI. The most recent example is MIT NANDA’s “The State of AI in Business 2025,” much better known as the ‘95% of AI projects fail!’ paper. To listen to Axios, The Register, AI News citing the FT, and of course commentators on Reddit, Hacker News, and Bluesky, the takeaways from this paper are, respectively:

“95% of organizations studied get zero return on their AI investment.”

“95% of enterprise organizations have gotten zero return from their AI efforts.”

“Gen AI makes no financial difference in 95% of cases.”

“MIT report says 95% AI fails at enterprise.”

“95 per cent of organisations are getting zero return from AI according to MIT.”

(mostly unprintable triumphalism about GenAI’s imminent death)

This is a shame for several reasons, one of which is that it’s quite an interesting and nuanced paper. Its actual takeaways are:

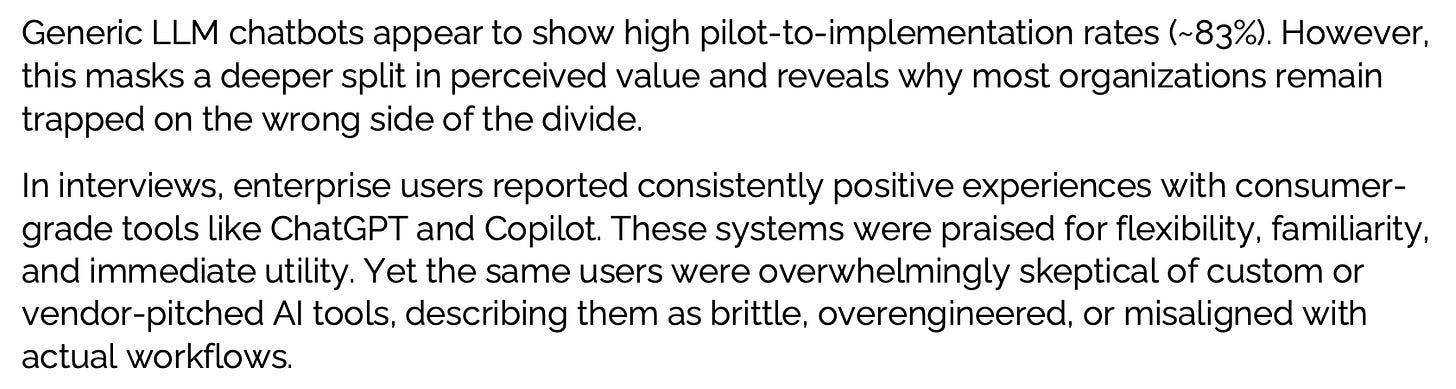

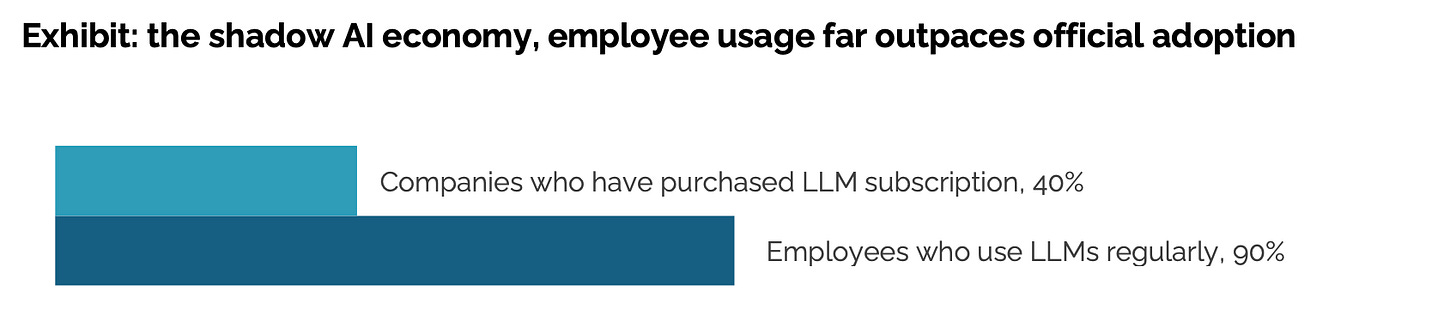

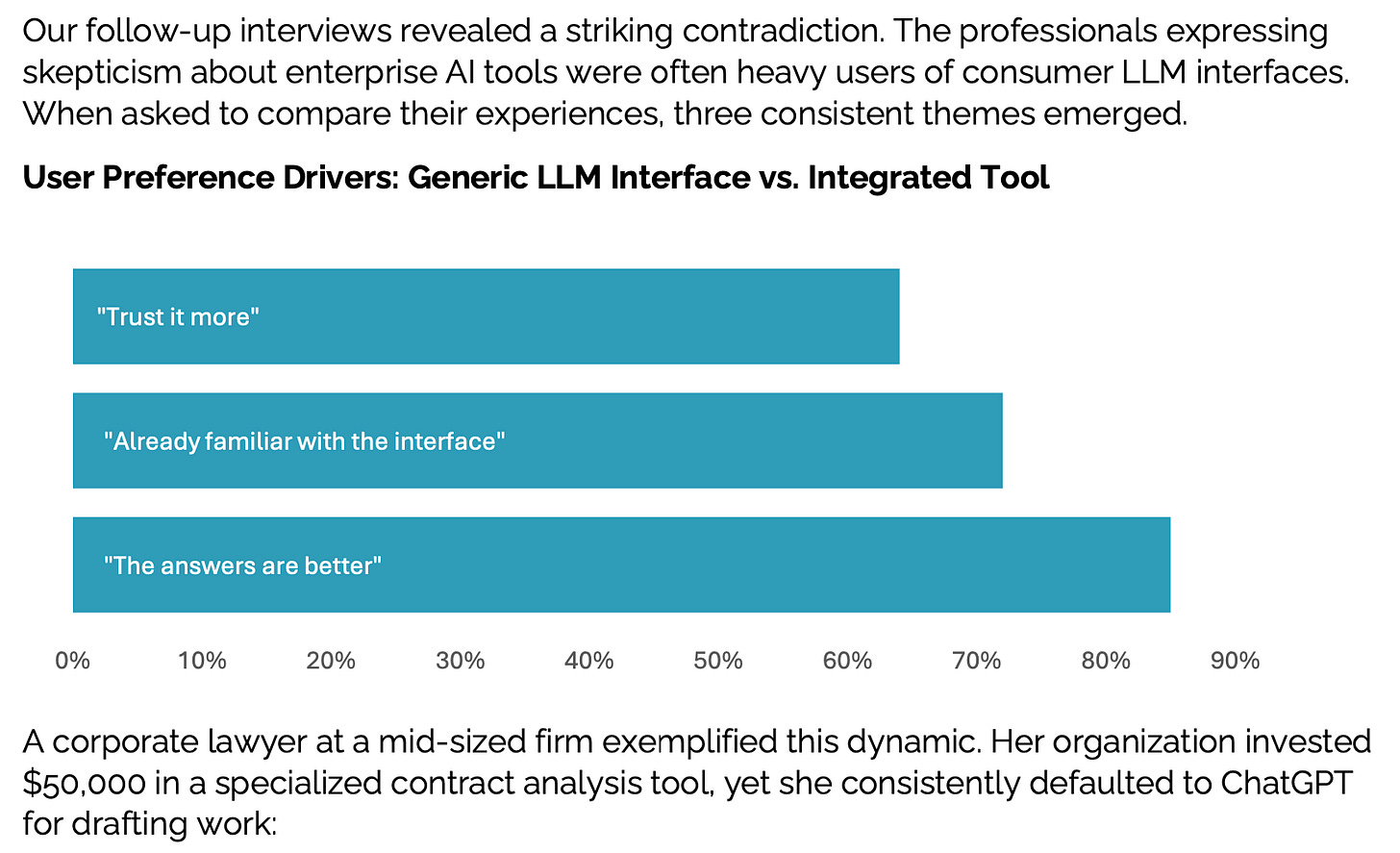

95% of custom-built internal tools that use AI in highly specific ways “fail,” i.e. never graduate from pilot projects to widespread implementation. (It’s a slightly wacky metric, measured in an equally wacky way, but let’s go with it.)

…but, by that same metric, standard LLM chatbots are highly successful, and partnerships with external vendors are quite successful.

Some relevant screenshots from the paper:

These are, to put it mildly, not screenshots from a paper saying “95 per cent of organisations are getting zero return from AI.” Rather, this paper is wildly bullish for the frontier labs, indicating that AI usage is already pervasive in enterprises everywhere, and that the lion’s share of it is still accruing to them, despite the efforts of enterprises to keep their tools (and data!) in-house.

…And yet it somehow got reported as, to quote Axios, “an existential risk for a market that's overly tied to the AI narrative.” See what I mean about feeling like a conspiracy theorist gleefully aware of the occult truth… just because you actually read the paper?

Second, On Possibly Making Things Less Depressing

I read freakishly fast, especially when skimming; that paper occupied ~5 minutes of my life. I understand that, for most people, reading the original paper is too much to ask. The whole point of this kind of journalism is accurate summarization (well, that and curation).

…But you know what else reads freakishly fast, even faster than me, and is fantastically good at accurate summarization?

That’s right. GPT-5, and Claude, and Gemini. A very simple way to avoid being part of the credulous misled masses is this: whenever you read a story about a paper, run that paper and the story through a frontier model. Have it summarize the paper for you, and/or ask whether the story has any howling errors or omissions. It’s easy: just paste both URLs into the ChatGPT interface and add your instructions.

No, it will almost certainly not hallucinate. (Hallucinations usually come from models ‘misremembering’ their pretraining; here, the paper and the story will be in the model’s context window, which models are nowadays very good at recalling.) And the sad reality is that even in the unlikely event it does… I am confident it will do so far less often than, say, modern human reporters.

“But where do I even find the paper?” Alas, that’s a better question than it should be. There is no excuse in the year 2025 for stories that don’t link to primary sources; and yet most don’t, meaning they aren’t to be trusted. I give you Evans’s Epistemic Lemma: if they don’t link to primary sources, they aren’t reporting on them accurately.[1]

In practice you may still have to Google or Duck or search-engine-of-your-choice for the original paper. (That’s how I had to find the MIT NANDA one.) Sorry.

A good, less emotionally charged example of this approach (one which also shows that LLM usage alone is no panacea!) is the recent Tunnels In Spaaaaace story. Here, Perplexity AI claims:

Astronomers find 'tunnels' of hot plasma between star systems

Astronomers have confirmed the existence of mysterious "interstellar tunnels" of hot plasma connecting our Solar System to distant star systems, according to a study published in Astronomy & Astrophysics.

This came up in a group chat I’m in. To which I replied:

man this is such a sad example of how information percolates today:

Headline of article cited by Perplexity: "Astronomers discover mysterious 'interstellar tunnels' in space"

Actual article beneath the headline: "confirmed that the Sun sits inside a giant bubble of hot gas, and within it, there might be strange “interstellar tunnels” connecting us to other star systems."

Article does not link to original paper, because we wouldn't want that, would we. Google leads to several more pop sci articles ... zero of which link the original paper

Actual paper: "we found evidence of hot gas filling nearby channels that lack neutral material ... we report on [a whopping two possible such channels] ... On the other hand, there is the presence of channels of low NH that do not seem to be filled with soft X-ray-emitting plasma"

Siiiiiiiiiiiiiiiigh

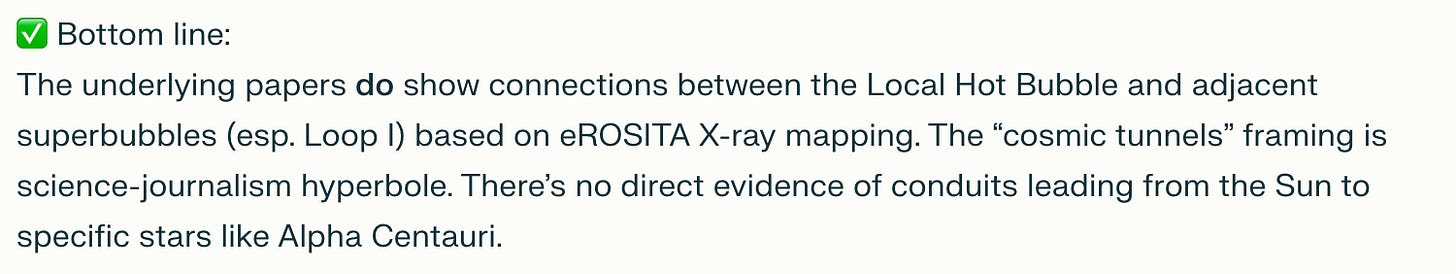

To the credit of the group chat, though, this led to a new and vastly epistemically improved Perplexity search:

Can you identify the research papers behind this story, enumerate the major claims made in the story, and flag each as supported or not supported by the real papers? If the answer is ambiguous state so and why.

I won’t link the results because they’re connected to the searcher. But they’re excellent:

Now that’s what I call quality modern journalism!

Third, On Epistemics In General

In general we need better epistemic lenses through which we view the world, and LLMs can help construct such lenses. (See also my epic 5,000-word rant from last year on What’s Wrong With Journalism.)

That was a common throughline of my professional life from 2023 to 2025: at Metaculus, where AFAIK I was the first person to propose that LLMs could be good at forecasting[2] and the first to propose an AI forecasting benchmark; at my short-lived startup, Dispatch AI; and at FutureSearch, which is all about AI epistemics. It’s still a factor in my professional life, in that I’m now at Meta Superintelligence, an organization that attracts a lot of journalistic attention, and … well, let’s just say the Gell-Mann amnesia effect has been on my mind on a near-daily basis.

LLM-optimized epistemics aren’t necessarily about more or better intelligence, but about more or better attention … and much, much, much less confirmation bias. Of course it is not good that an LLM posing as “Margaux Blanchard” successfully wrote articles for Wired and Business Insider. But it does highlight that a less deceptive, more thoughtful approach to LLM-powered journalism would be no worse than much of the purely artisanal (and thus prone to entire genres of its own deep flaws!) work out there today. Maybe, by which I mean almost certainly, journalism is yet another field that would benefit enormously from the combination of human and artificial intelligences.

[1] “But don’t major news organizations like the New York Times often fail to link to primary sources?” Why, yes. Yes, that is correct.

[2] In fairness, this was largely because I got a sneak peek at GPT-4 months before it shipped.