Walter and other stories

An August grab bag of delights. Well, divertissements.

My friend Rohit Krishnan and I wrote a pretty cool reinforcement learning paper, if I do say so myself! I don’t want to rehash Rohit’s writeup, other than to tell you to go read it. But, very briefly: we (mostly he) taught a small LLM how to be good at Bluesky, in a way that generalizes to all kinds of real-world problems where it’s hard to judge what’s good. We (he) then found that we had independently discovered the bones of an approach DeepMind also used to train Gemini 2.5, which is cool, but had apparently also used some genuinely new techniques, which is even cooler.

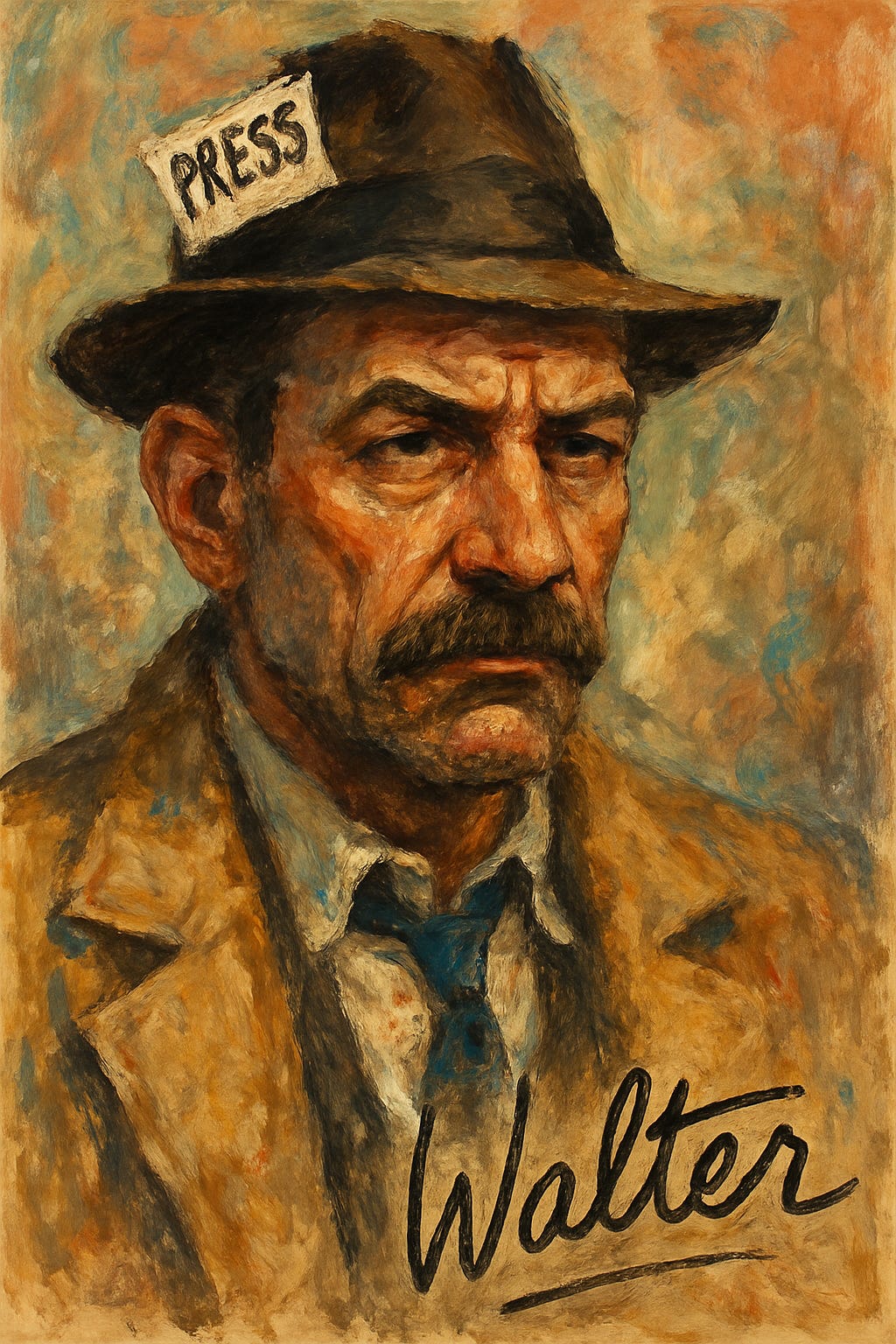

We named it Walter, after Bagehot and Cronkite, because the original idea was to have it write not just high-quality skeets but actual high-quality articles. (No, not just in terms of engagement, but also in terms of thoughtfulness and the quality of the conversations they provoke.) That was a little aspirational … but AI approaches to improving the epistemic quality of journalism remain a) a personal interest and b) direly, desperately needed. More on that in the near future, I expect.

Rohit is so, so the first author on this paper, btw. My contribution was basically:

- writing the code to harvest the training data from Bluesky over the first half of this year (shoutout to Bluesky for having quite a decent and accessible API available to anyone, even if its documentation could use some love), and
- serving in the crucial role of Official Rubber Duck.

I clarify that last point partly because last month I started working at Meta Superintelligence, with the gloriously murky job title of “Solutions Architect,” which is why my publication frequency has diminished of late. It’s a super interesting gig; obviously I can’t really talk about it, and I mention it only to make clear that anything I write here about AI, or anything else, will be so very extremely not any kind of official Meta position. (I probably won’t even know the official Meta position most of the time.)

This is actually my third arXiv AI paper this year, after the two from my (fondly remembered) former employer FutureSearch on which my name appears: Deep Research Bench and Bench to the Future.

…Actually, I’m pretty sure those aren’t just my three research papers of this year, but my three research papers of this life. That may sound odd from a guy who has written nine books and had a long and diverse STEM career, but academic publishing had never appealed to me before. I couldn’t wait to leave school once I had my EE degree. It seems interesting / instructive that both working and playing in modern AI seem to inevitably lead you to and through the arXiv.

More generally, the culture of the AI industry is far more influenced by academia than that of any other branch of software. It’s interesting how technologies develop their own unique and idiosyncratic cultures. Cryptocurrencies inevitably come with “white papers” because Satoshi set the cultural tone for Bitcoin and everyone has since followed. (Here’s a database with more than 3,000 such “crypto whitepapers.”) AI has a history of open research and publication because, during the long AI winter of the mid-80s through the mid-00s, academia was the one place its guttering flame was kept alive.

If a technology gets big enough and important enough, it actually develops multiple cultures, and culture wars: open source began as something of an insurgency, grew to become an empire … and, like all such empires, compromised some (though not all) of its initial core values en route. I’d be interested to know whether the cultures that develop are pure path dependence or are actually inherent to the technologies. For instance, it makes sense, and was maybe inevitable, that OSS grew up in software and especially web software, which can be remixed and interconnected relatively easily, compared to, say, hardware microcontrollers. Was AI’s academic bent inevitable, though? My guess is no.

Anyway, reinforcement learning from non-verified real-world rewards is really cool and absolutely the future! You heard it here Nth. But that is probably not currently the official Meta position. Don’t ask me, I wouldn’t know.

Here’s DALL-E’s impression of Walter. Wow, imagegen has gotten good.